Beginning a model for emergent intelligent behavior

Figure: A single neuron under an electron microscope

Figure: Anatomy of a Neuron

The field of Artificial Intelligence has a lot to say about the mechanisms by which intelligence might work. Early pioneers of the field studied the structure of neurons, like the one pictured above. Dendrites, the short branches extending from the cell body, receive chemical and electrical inputs from other neurons. The axon carries the neuron's electrical output, and its endings can lie far from the cell body. When enough electrical activity builds up from simultaneous pulses arriving at the dendrites, the neuron fires a signal down its axon. Neurons form networks where the axon endings of one neuron meet the dendrites of others. But an arbitrary electrical network is not that interesting. What guiding principle gives neural networks the plasticity that lets them mimic patterns? One long-standing theory of how these adaptations occur was proposed in 1949 by the psychologist Donald Hebb.

Hebbian Learning

The basic idea of Hebbian learning is captured in the expression "cells that fire together, wire together". When an input neuron fires and its firing coincides with the firing of the output neuron, the synaptic connection between them grows stronger; connections that stay inactive grow weaker over time. This insight suggests a mathematical model for updating the connections in an artificial neural network, so that a neuron learns to reproduce the activity at its output given only its inputs. Suppose we model a single neuron as follows:

Figure: Model of a single neuron

Here, the neuron's dendrites are represented by the X inputs on the left, which form a linear combination to produce the output Y on the right, representing the neuron's axon. The W weights represent the strength of the connection for each dendrite. When a pattern of X inputs fires, each weight is updated as follows:

$$ w_i \leftarrow w_i + \eta \, x_i \, y $$

This equation says a weight grows stronger when the input X and the output Y agree and weaker when they disagree. This holds when both are coded as -1 and +1, so that the product x_i y is positive for agreement and negative for disagreement. The parameter η (eta) is the learning rate. From the diagram, we see that the linear combination feeds into f, a nonlinear function that limits the range of the output. To mimic the all-or-nothing firing of a real neuron, a step function is usually used, so that a positive linear combination produces a 1 at the output and a negative one produces a 0. If you trained such a neuron on example inputs and outputs, it would eventually learn to predict, from the inputs alone, the output that best matches its training set. A neuron of this kind is called a perceptron. A perceptron is capable of classifying any data set that is linearly separable in the input space, but on its own it cannot identify more complex patterns. The classic example is XOR, whose two classes cannot be split by a single straight line.
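To make the update rule concrete, here is a minimal Python sketch of a single neuron trained with the Hebbian update. It assumes inputs and outputs are coded as -1/+1 (so the step function outputs +1 or -1 rather than 1 or 0), adds a constant bias input of 1, and clamps the output to the desired target during training. That makes it a supervised form of the Hebbian rule, not the perceptron learning rule. The names `sign_step`, `predict`, and `hebb_train` are hypothetical.

```python
from typing import List, Sequence, Tuple

# Assumption: (inputs including a bias input of 1, target), all coded as -1/+1.
Sample = Tuple[List[int], int]

def sign_step(z: float) -> int:
    # Bipolar step function standing in for f: +1 if positive, else -1.
    return 1 if z > 0 else -1

def predict(w: Sequence[float], x: Sequence[int]) -> int:
    # Linear combination of the inputs, passed through the step function.
    return sign_step(sum(wi * xi for wi, xi in zip(w, x)))

def hebb_train(samples: List[Sample], eta: float = 1.0) -> List[float]:
    # Supervised Hebbian rule: the output is clamped to the target y and
    # each weight moves by eta * x_i * y (up when x_i and y agree, down when not).
    w = [0.0] * len(samples[0][0])
    for x, y in samples:
        for i, xi in enumerate(x):
            w[i] += eta * xi * y
    return w

# Inputs are [x1, x2, bias]; the bias input is always 1.
AND: List[Sample] = [([1, 1, 1], 1), ([1, -1, 1], -1), ([-1, 1, 1], -1), ([-1, -1, 1], -1)]
XOR: List[Sample] = [([1, 1, 1], -1), ([1, -1, 1], 1), ([-1, 1, 1], 1), ([-1, -1, 1], -1)]

if __name__ == "__main__":
    for name, data in (("AND", AND), ("XOR", XOR)):
        w = hebb_train(data)
        correct = sum(predict(w, x) == y for x, y in data)
        print(name, w, f"{correct}/{len(data)} correct")
```

With this coding the AND weights come out as [2.0, 2.0, -2.0] and classify all four cases correctly, while the XOR contributions cancel to all-zero weights, so the single neuron cannot separate the XOR classes.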

Figure: Multi-layer Perceptron

Now suppose we take these neurons and chain them together to form a more complicated network. Here, the network feeds its input impulses to several neurons, which in turn connect their axons to two output neurons. This feed-forward, multi-layer neural network can identify more complicated, non-linear patterns. Training it is also more complex: the error at the output layer is propagated backwards toward the input layer to work out how much each weight should change, which is where the term "backpropagation" comes from. (Strictly speaking, backpropagation is a gradient-descent method driven by the output error rather than a Hebbian rule.) So with only a simple feed-forward neural network, we can build a structure capable of identifying patterns. But the brain does so much more: it can store and recall memories, and learn to make decisions to its own benefit. How does this happen?
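As a rough sketch of backpropagation, the Python below trains a small two-layer network of sigmoid neurons on XOR, the pattern a single perceptron cannot learn. The hidden-layer size, learning rate, epoch count, random seed, and the function name `train_xor` are illustrative assumptions, and a smooth sigmoid replaces the step function because backpropagation needs a differentiable f. Depending on the initial weights, training of this kind can occasionally stall in a poor local minimum.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# XOR truth table with 0/1 targets: not linearly separable.
XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

def train_xor(hidden: int = 4, lr: float = 0.5, epochs: int = 5000, seed: int = 0):
    rng = random.Random(seed)
    # Each hidden unit has weights [w_x1, w_x2, bias].
    w_h = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(hidden)]
    # Output unit: one weight per hidden unit, plus a bias in the last slot.
    w_o = [rng.uniform(-1.0, 1.0) for _ in range(hidden + 1)]

    def forward(x):
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w_h]
        y = sigmoid(sum(w_o[j] * h[j] for j in range(hidden)) + w_o[hidden])
        return h, y

    def mse() -> float:
        return sum((forward(x)[1] - t) ** 2 for x, t in XOR_DATA) / len(XOR_DATA)

    start = mse()
    for _ in range(epochs):
        for x, t in XOR_DATA:
            h, y = forward(x)
            # Error signal at the output: squared-error gradient times sigmoid slope.
            d_out = (y - t) * y * (1.0 - y)
            # Propagate the error backwards to each hidden unit (before changing w_o).
            d_hid = [d_out * w_o[j] * h[j] * (1.0 - h[j]) for j in range(hidden)]
            # Gradient-descent updates: output layer, then hidden layer.
            for j in range(hidden):
                w_o[j] -= lr * d_out * h[j]
            w_o[hidden] -= lr * d_out
            for j in range(hidden):
                w_h[j][0] -= lr * d_hid[j] * x[0]
                w_h[j][1] -= lr * d_hid[j] * x[1]
                w_h[j][2] -= lr * d_hid[j]
    return start, mse(), forward

if __name__ == "__main__":
    start, end, forward = train_xor()
    print(f"MSE before: {start:.4f}, after: {end:.4f}")
    for x, t in XOR_DATA:
        print(x, t, round(forward(x)[1], 3))
```

The key step is `d_hid`: each hidden neuron's share of the blame is the output error scaled by the weight connecting it to the output, which is exactly the backward flow of information the paragraph describes.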

Figure: Hopfield Network

Hopfield Networks

The next piece of the puzzle is a special type of neural network known as a Hopfield network. A Hopfield network differs from a feed-forward neural network because the outputs of its neurons feed back into the network as inputs. As inputs are fed into such a network, the outputs loop back to the inputs, providing a new input for the network to respond to. This feedback repeats until the network settles into a stable state. One property of such a network is that it acts as a type of memory: if you give it an incomplete or partially garbled set of inputs, it will tend to converge on the stored memory that most closely resembles them. For instance, if the inputs were the pixels of an image and we provided only the left half of the image, the network would naturally reproduce the right half (as seen below).

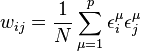

Again, there is a Hebbian learning learning rule for updating this type of network, as follows:

The next piece of the puzzle are a special type of neural network known as a Hopfield network. A Hopfield network is different from a feed forward neural network because it allows for outputs from a neuron to have feedback loops to itself. As inputs are fed into such a network, the outputs loop back to the inputs, providing a new training input for the network to adapt to. This feedback repeats until the network converges on a stable solution. One of the properties of such a network is that is acts as a type of memory. If you give it an incomplete set of inputs, or if the inputs are partially garbled, it will naturally converge on the actual memory that most closely resembles the inputs it was fed. For instance, if the inputs were pixels of an image, and we only provided the left half of the image as an input, it wold naturally reproduce the right half of the image (as seen below).

|

| Hopfield network memory recovery from partial input |

which says that, for a network of fully interconnected neurons trained on P example patterns, the weight between neurons i and j is the sum, over all training examples, of the product of the values x_i and x_j (a neuron has no connection to itself, so w_ii = 0).
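A minimal Python sketch of the storage rule and the feedback loop might look like the following. It assumes neuron states of -1/+1 (with 0 marking an unknown input), updates one neuron at a time until no state changes, and uses the hypothetical function names `train_hopfield` and `recall`. The two stored patterns are chosen to be orthogonal, which keeps recall reliable in this tiny example.

```python
from typing import List

def train_hopfield(patterns: List[List[int]]) -> List[List[int]]:
    # Hebbian storage: w_ij = sum over patterns of x_i * x_j, with w_ii = 0.
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def recall(w: List[List[int]], state: List[int], max_sweeps: int = 100) -> List[int]:
    # Feed outputs back as inputs, one neuron at a time, until nothing changes.
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            new = s[i] if h == 0 else (1 if h > 0 else -1)  # keep state on a tie
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:  # stable state reached
            break
    return s

if __name__ == "__main__":
    p1 = [1, 1, 1, 1, -1, -1, -1, -1]
    p2 = [1, -1, 1, -1, 1, -1, 1, -1]
    w = train_hopfield([p1, p2])
    noisy = [-p1[0]] + p1[1:]        # p1 with its first bit flipped
    half = p1[:4] + [0, 0, 0, 0]     # left half of p1, right half unknown
    print(recall(w, noisy) == p1)  # True
    print(recall(w, half) == p1)   # True
```

Recovering `p1` from only its left half is a miniature version of the image-completion example: the feedback loop fills in the missing entries with the stored memory they most resemble.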

What Next?

So we now have a basic understanding of how neurons form networks, and how those networks can adapt their connections through Hebbian learning to build pattern recognizers. We also have a mechanism for the network to store memories in an auto-associative fashion. But there is a problem: how does the brain learn to tell good behaviors from bad ones? Imagine you start walking towards a wall. Eventually you reach the wall, but failing to stop, you bonk your head. A pattern recognizer trained on this situation may recognize that when you reach the wall, your head will hurt, but it would not care one way or the other about the outcome it predicted. Artificial Intelligence typically prescribes a teacher who separates good behaviors from bad ones and trains the neural network to avoid the bad ones. I believe this is an incomplete explanation, since our brains do not have the luxury of a teacher who predefines every good and bad experience for us. Telling good from bad is instead an emergent property of the mind. I would like to explore what mechanisms could produce this emergent property, and arrive at a hypothesis of how intelligent behavior forms.

Stay tuned for more to come...
